matt.davis@mrc-cbu.cam.ac.uk

01223 767599

Matt leads a programme of research at the CBU on 'Adaptive processing of spoken language', including projects on these and other topics:

- Predictive processing of speech

- Oscillatory synchronisation to speech

- Individual differences in speech

Latest news: Endogenous neural oscillations shown by sustained oscillations for rhythmic intelligible speech:

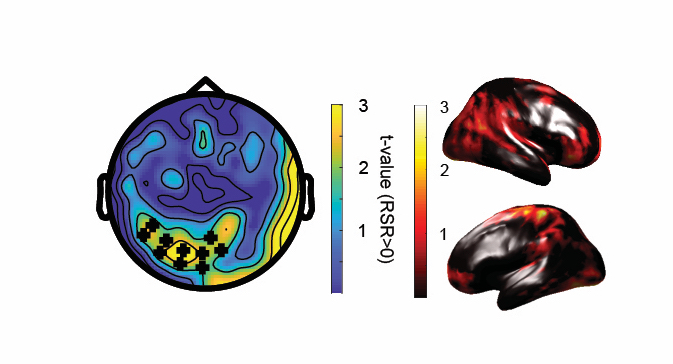

Working with Sander van Bree, Ed Sohoglu and Benedikt Zoefel, we show that: (1) rate-specific oscillations in response to intelligible speech can be measured with MEG during post-stimulus silence and are absent after unintelligible speech; (2) tACS produces rhythmic fluctuations in word report that continue to influence processing after the offset of electrical stimulation; (3) the optimal timing of tACS for word report can be predicted from independent EEG responses. In combination, these observations provide compelling evidence that intelligible speech entrains endogenous neural oscillations that have a causal influence on speech perception.

You can find references for this and other recent publications online or see a full list on Scopus, Google Scholar or ORCID.

Current CBU Staff:

Current PhD Students:

Madeleine Rees (Department of Theoretical and Applied Linguistics, with Brechtje Post)

CBU collaborators:

Other Cambridge collaborators:

Thomas Cope, Department of Clinical Neurosciences

Brechtje Post, Department of Theoretical and Applied Linguistics

External Collaborators:

Helen Blank, University Medical Center Hamburg-Eppendorf, Hamburg, Germany

Sam Evans, University of Westminster, London, UK

Ev Fedorenko, Brain and Cognitive Sciences, MIT, Cambridge, MA, USA

Ingrid Johnsrude, Brain and Mind Institute, University of Western Ontario, London, Ontario, Canada

Jonathan Peelle, Department of Otolaryngology, Washington University in St. Louis, MO, USA

Clare Press, Psychology Department, Birkbeck College, London, UK

Kathy Rastle, Department of Psychology, Royal Holloway, University of London, UK

Holly Robson, Language and Cognition, University College London, UK

Jenni Rodd, Experimental Psychology, University College London, UK

Ed Sohoglu, School of Psychology, University of Sussex, Brighton, UK

Jo Taylor, Language and Cognition, University College London, UK

Benedikt Zoefel, CNRS, Centre de Recherche Cerveau et Cognition, Toulouse, France

Previous research highlights:

Blank, H., Spangenberg, M., Davis, M.H. (2018) Neural prediction errors distinguish perception and misperception of speech. Journal of Neuroscience, 38(27), 6076-6089. CBU News Story

Zoefel, B., Archer-Boyd, A., Davis, M.H. (2018) Phase entrainment of brain oscillations causally modulates neural responses to intelligible speech. Current Biology, 28(3), 401-408. CBU News Story

Sohoglu, E., Davis, M.H. (2016) Perceptual learning of degraded speech by minimizing prediction error. Proceedings of the National Academy of Sciences of the USA, 113(12), E1747-E1756. CBU News Story

Gagnepain, P., Henson, R.N., Davis, M.H. (2012) Temporal predictive codes for spoken words in human auditory cortex. Current Biology, 22(7), 615-621. Summary

Thanks to Berger & Wyse and the Guardian Weekend for a summary of our research on speech comprehension at reduced levels of awareness. You can also read about our previous research using brain imaging to detect residual speech processing during sedation.

Psycholinguistic "research" from the internet:

Aoccdrnig to a rscheearch at Cmabrigde Uinervtisy, it deosn't mttaer in waht oredr the ltteers in a wrod are, the olny iprmoetnt tihng is taht the frist and lsat ltteer be at the rghit pclae. The rset can be a toatl mses and you can sitll raed it wouthit porbelm. Tihs is bcuseae the huamn mnid deos not raed ervey lteter by istlef, but the wrod as a wlohe. Here's a very old page that I wrote about the problems of reading jumbled texts.

MRC Cognition and Brain Sciences Unit