Deep convolutional neural net model explains IT object representational space in man and monkey

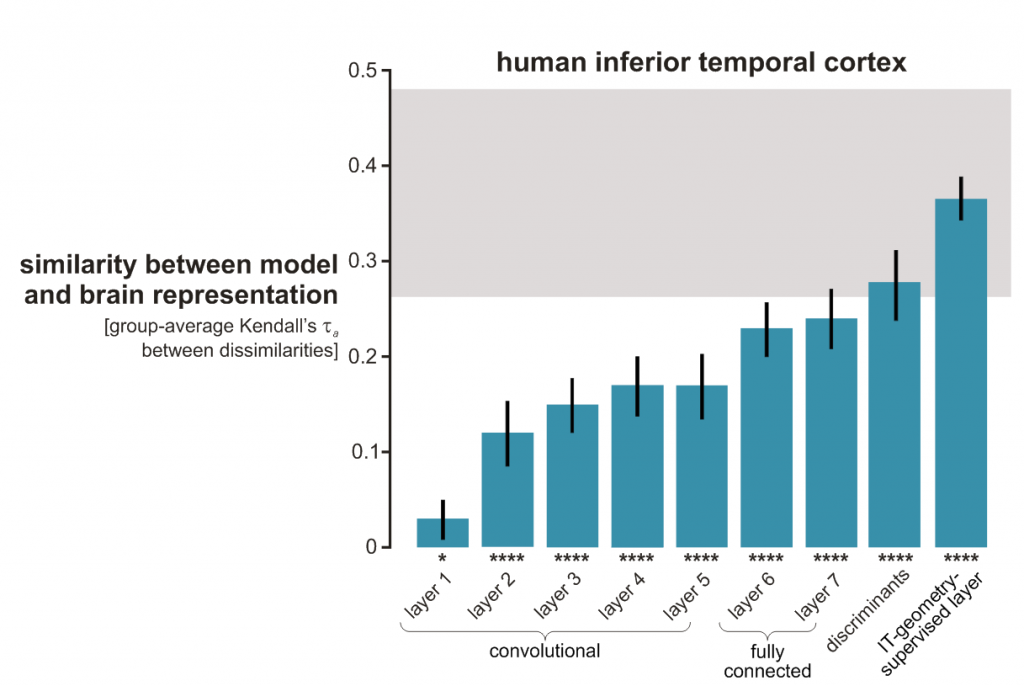

Deep neural net explains inferior temporal (IT) representations of object images. Representations in model and brain were characterised by the representational dissimilarity matrix (RDM) of the response patterns elicited by a set of real-world object images. As we ascend the layers of the Krizhevsky et al. (2012) neural net, the representations become monotonically more similar to the representation in human IT cortex. When the final representational stages are linearly remixed to emphasise the same semantic dimensions as IT, the model reaches the noise ceiling (gray): it explains the IT representation as well as can be expected given the noise in the data. Similar results obtain for monkey IT.
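The comparison described in the caption is a form of representational similarity analysis. The pure-Python sketch below illustrates the idea under stated assumptions: correlation distance (1 - Pearson r) as the dissimilarity measure and Kendall's tau-a as the statistic for comparing RDMs. The function names and the synthetic "IT" and "layer" data are hypothetical. The `noise_ceiling` function is a simplified leave-one-subject-out estimate and not necessarily the exact procedure used in the studies referenced below.

```python
import math
import random
from itertools import combinations


def pearson(x, y):
    """Pearson correlation of two equal-length vectors (assumes neither is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(sxx * syy)


def rdm(patterns):
    """Upper triangle (row-major) of the RDM: 1 - Pearson r for every stimulus pair."""
    return [1.0 - pearson(patterns[i], patterns[j])
            for i, j in combinations(range(len(patterns)), 2)]


def kendall_tau_a(x, y):
    """Kendall's tau-a: (concordant - discordant) / number of pairs; ties count as neither."""
    s = 0
    for i, j in combinations(range(len(x)), 2):
        prod = (x[i] - x[j]) * (y[i] - y[j])
        if prod > 0:
            s += 1
        elif prod < 0:
            s -= 1
    n = len(x)
    return s / (n * (n - 1) / 2)


def mean_rdm(rdms):
    """Element-wise average of several RDM vectors."""
    return [sum(vals) / len(vals) for vals in zip(*rdms)]


def noise_ceiling(subject_rdms):
    """(lower, upper) estimate of the best mean tau any model could reach.

    upper: each subject vs. the mean of all subjects (including that subject)
    lower: each subject vs. the mean of the other subjects (leave one out)
    Requires a list of at least two subject RDMs.
    """
    k = len(subject_rdms)
    grand = mean_rdm(subject_rdms)
    upper = sum(kendall_tau_a(r, grand) for r in subject_rdms) / k
    lower = sum(kendall_tau_a(r, mean_rdm(subject_rdms[:i] + subject_rdms[i + 1:]))
                for i, r in enumerate(subject_rdms)) / k
    return lower, upper


if __name__ == "__main__":
    rng = random.Random(0)
    n_units = 30
    # Hypothetical "IT" data: 12 stimuli from 2 categories, each a noisy copy
    # of its category prototype, so the RDM has a block structure.
    protos = [[rng.gauss(0, 1) for _ in range(n_units)] for _ in range(2)]
    it = [[v + rng.gauss(0, 1) for v in protos[s % 2]] for s in range(12)]
    it_rdm = rdm(it)
    # Hypothetical "layers" with 50 units each: random linear readouts of the
    # IT patterns plus noise whose scale shrinks from early to late.
    for name, noise in [("early", 20.0), ("middle", 5.0), ("late", 1.0)]:
        w = [[rng.gauss(0, 1) for _ in range(n_units)] for _ in range(50)]
        layer = [[sum(a * b for a, b in zip(row, p)) + rng.gauss(0, noise) for row in w]
                 for p in it]
        print(name, round(kendall_tau_a(rdm(layer), it_rdm), 3))
```

Because only the stimulus-by-stimulus RDMs are compared, a model layer and IT need not have the same number of units or any unit-to-unit correspondence; this is what allows a network layer to be compared with fMRI voxel patterns or monkey cell recordings.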

References

Kriegeskorte N (2015). Deep neural networks: a new framework for modelling biological vision and brain information processing. Annual Review of Vision Science 1:417-446. Preprint on bioRxiv.

Khaligh-Razavi SM, Kriegeskorte N (2014). Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Computational Biology 10(11): e1003915.

MRC Cognition and Brain Sciences Unit
