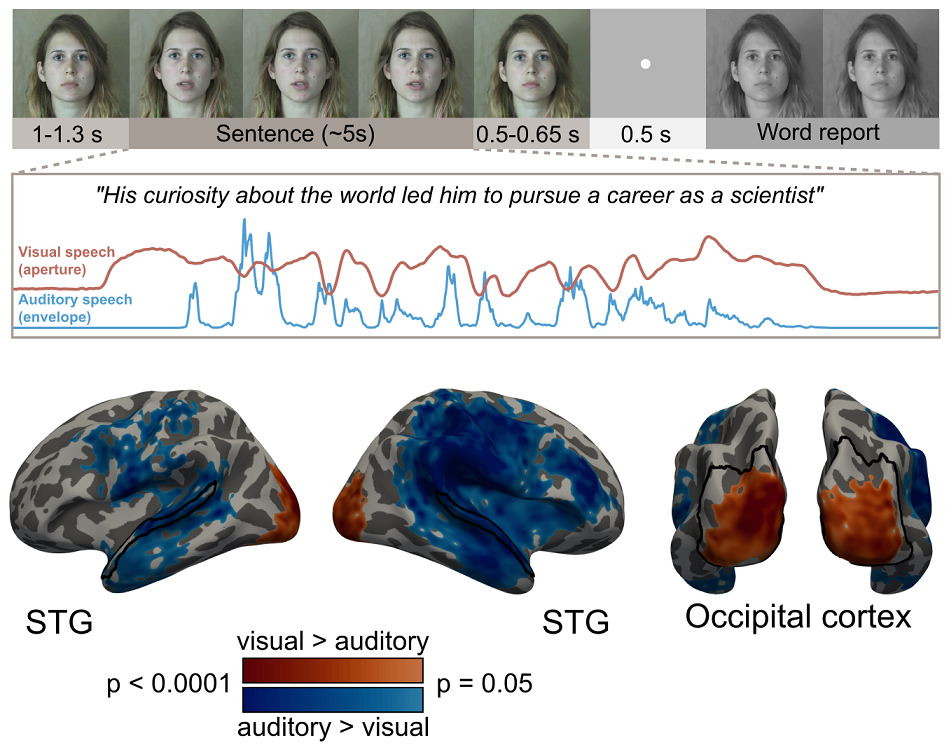

Verbal communication in noisy environments is challenging, especially for hearing-impaired individuals. Seeing facial movements of communication partners improves speech perception when auditory signals are degraded or absent. The neural mechanisms supporting lip-reading or audio-visual benefit are not fully understood.

In this study, Máté Aller, Heidi Solberg Økland, Lucy J. MacGregor, Helen Blank, and Matthew H. Davis from the MRC Cognition and Brain Sciences Unit (MRC CBU) used MEG recordings and partial coherence analysis to show that brain regions responding to auditory and visual speech use speech information differently. Visual areas use visual speech to improve phase-locking to auditory speech signals. Auditory areas, by contrast, do not show phase-locking to visual speech unless auditory speech is absent and visual speech is used to substitute for the missing auditory signals. These findings highlight brain processes that combine visual and auditory signals to support speech understanding, with implications for people with hearing impairment.

Read the full paper here: https://www.jneurosci.org/content/early/2022/06/24/JNEUROSCI.2476-21.2022

MRC Cognition and Brain Sciences Unit