Whenever you perceive something in the outside world, such as someone walking toward you in the street, your brain almost instantly processes a wide range of information. Do you recognise the person? Should you approach or move away? We have to represent essential features of the world in order to act successfully. Traditionally, neuroimaging has studied how much each functional region of the brain is activated as a whole during a given task. In the past decade, many studies have attempted to look inside these regions and analyse the information represented in the regional patterns of brain activity. A growing number of studies have taken a further step and attempted to characterise not only the information in regional activity patterns, but also the geometry of the representation, i.e. the extent to which a representation places different stimuli together (deemphasising their differences) or apart. In a new paper in Trends in Cognitive Sciences, Nikolaus Kriegeskorte and Rogier Kievit review these studies and describe how investigating the geometry of representations can reveal how we process, represent, and act on the stream of information from the outside world.

Much as we can describe different cars along various dimensions (power, speed, aesthetic appeal, price), we can use fMRI to measure hundreds of neural dimensions for each functional brain region while someone perceives, for instance, a person. At the behavioural level, we can simply ask people to judge the similarity of different objects. It is then possible to examine how our subjective sense of similarity (‘How similar are these cars?’) is related to neural representational similarity. Doing so can illustrate how complex processes such as learning, memory, cognition and recognition work in the brain. We can track the transformation of representations through stages of processing, from the moment the signals from a visual image (or other stimulus) enter the brain to the moment you decide to act on them (‘That’s my friend John, I should say hello’).
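For readers who want to see the idea in concrete terms, the comparison between neural and behavioural similarity can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the analysis used in the paper: the response patterns and the behavioural judgments are simulated, the function names (`rdm`, `upper_triangle`, `spearman`) are hypothetical, and the choice of correlation distance and rank correlation is one common option among several.

```python
import numpy as np

def rdm(patterns):
    """Dissimilarity matrix: 1 - Pearson correlation between stimulus patterns (rows)."""
    return 1.0 - np.corrcoef(patterns)

def upper_triangle(matrix):
    """Return the off-diagonal upper-triangle entries, one per stimulus pair."""
    i, j = np.triu_indices(matrix.shape[0], k=1)
    return matrix[i, j]

def spearman(a, b):
    """Spearman rank correlation; this simple ranking assumes there are no ties."""
    rank_a = np.argsort(np.argsort(a)).astype(float)
    rank_b = np.argsort(np.argsort(b)).astype(float)
    return np.corrcoef(rank_a, rank_b)[0, 1]

rng = np.random.default_rng(0)
n_stimuli, n_voxels = 6, 200

# Simulated data (assumption): one response pattern per stimulus in one brain region.
neural_patterns = rng.normal(size=(n_stimuli, n_voxels))
neural_rdm = rdm(neural_patterns)

# Simulated behavioural dissimilarity judgments (assumption): neural RDM plus noise,
# made symmetric with a zero diagonal, as pairwise judgments would be.
behavioural_rdm = neural_rdm + rng.normal(scale=0.05, size=neural_rdm.shape)
behavioural_rdm = (behavioural_rdm + behavioural_rdm.T) / 2
np.fill_diagonal(behavioural_rdm, 0.0)

# How well does the neural geometry predict the judged similarity structure?
rho = spearman(upper_triangle(neural_rdm), upper_triangle(behavioural_rdm))
print(f"Rank correlation between neural and behavioural geometry: {rho:.2f}")
```

Each matrix holds one dissimilarity per pair of stimuli, so two very different kinds of measurement (voxel patterns and similarity judgments) become directly comparable without needing to match individual voxels to individual judgments.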

Studying patterns of similarity can help relate brain representations to cognition and behaviour. Recent work reviewed in this paper has shown, for example, how visual and auditory sensory representations are transformed into semantic and perceptual representations (see Figure), how we are able to successfully recall memories, how phonemes are categorically represented in the human brain, how the brains of native speakers of English and Japanese represent different phonemes (e.g. ‘la’ and ‘ra’), and how different smells are represented.

Kriegeskorte, N. & Kievit, R. A. (2013). Representational geometry: integrating cognition, computation, and the brain. Trends in Cognitive Sciences, 17, 401-412. (http://www.cell.com/trends/cognitive-sciences/abstract/S1364-6613%2813%2900127-7)

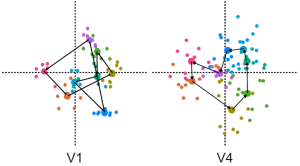

Representational geometry of colours in V1 and V4 estimated from fMRI response patterns by Brouwer & Heeger (2009). The colours presented to subjects during fMRI are arranged in 2D, such that colours eliciting similar response patterns are placed together, and colours eliciting dissimilar response patterns are placed apart. V1 represents the colours distinctly, but does not continuously reflect perceptual colour similarity (black connections link perceptually similar colours). V4 reflects perceptual colour space. This illustrates that we need to consider not merely the information present, but also the representational geometry when investigating brain representations.

MRC Cognition and Brain Sciences Unit
